
I recently had the chance to build something in the Atlas B2, a cool space that has multiple projectors, mocap, lights, and 40-speaker surround sound.

I’d done a number of cool projects in p5.js for Cacheflowe’s Creative Code class, but since p5.js only runs in a browser, I had to figure out Processing instead. Fortunately, I spent many years as a Java developer, and it’s only gotten better in the intervening decade.

Since I only got two hours in the space, I tried to figure out a solid plan of attack first. Here’s what I settled on:

Let’s do the fun bit first: here’s the demo. Many thanks to Brad for working with me on getting all the fiddly bits connected quickly.

You can check out the GitHub repository; it should be reasonably self-explanatory.

I create a Syphon canvas as follows:

```java
syphonCanvas = createGraphics(commandLineWidth, commandLineHeight, P2D);
server = new SyphonServer(this, "FreeSwim1");
```

and when I’m done with each frame I can offload it with

```java
syphonCanvas.endDraw();
server.sendImage(syphonCanvas);
```

This shows up magically as a source in Resolume.

Receiving OSC packets was also pretty easy in Java. I pass `this` in and make the default class implement the `OscEventListener` interface:

```java
// listen for incoming OSC messages on oscport
oscP5 = new OscP5(this, oscport);

@Override
public void oscEvent(OscMessage message) {
    for (int i = 0; i < emitters.length; i++) {
        // check if the OSC path matches a simple pattern
        String emitterPattern = "/emitters/" + i;
        if (message.checkAddrPattern(emitterPattern + "/x")) {
            // parse the coordinate out of the message
            float value = message.get(0).floatValue();
            if (emitters[i] != null) {
                // scale the incoming value by the canvas width
                emitters[i].x = value * width;
            }
        }
        if (message.checkAddrPattern(emitterPattern + "/y")) {
            float value = message.get(0).floatValue();
            if (emitters[i] != null) {
                // scale the incoming value by the canvas height
                emitters[i].y = value * height;
            }
        }
    }
}
```

The build is set up to use Gradle and also builds automatically from my repository using a GitHub Action. This is accomplished by the `.github/workflows/gradle-publish.yml` configuration. Each time a change is made in the code, GitHub automatically spins up a virtual machine and builds a new version of the project. I use the shadowJar target to build a jar file with (almost) all the dependencies included in it. I did have to copy the Syphon JNI bindings into the same directory as this jar file and didn’t have time to troubleshoot that step.

```yaml
- name: Build with Gradle
  uses: gradle/gradle-build-action@bd5760595778326ba7f1441bcf7e88b49de61a25 # v2.6.0
  with:
    arguments: shadowJar
- name: Get current date and time in Denver
  id: date
  run: echo "::set-output name=datetime::$(TZ="America/Denver" date +'%Y-%m-%d-%H-%M-%S')"
  shell: bash
- name: Upload artifact
  uses: actions/upload-artifact@v2
  with:
    name: freeswim-${{ steps.date.outputs.datetime }}.jar
    path: app/build/libs/*.jar
```

The automatic build process helped me iterate more quickly, as I could easily make changes to the code on my laptop (while working in the B2) and a few seconds later the built jar file would be available for download.

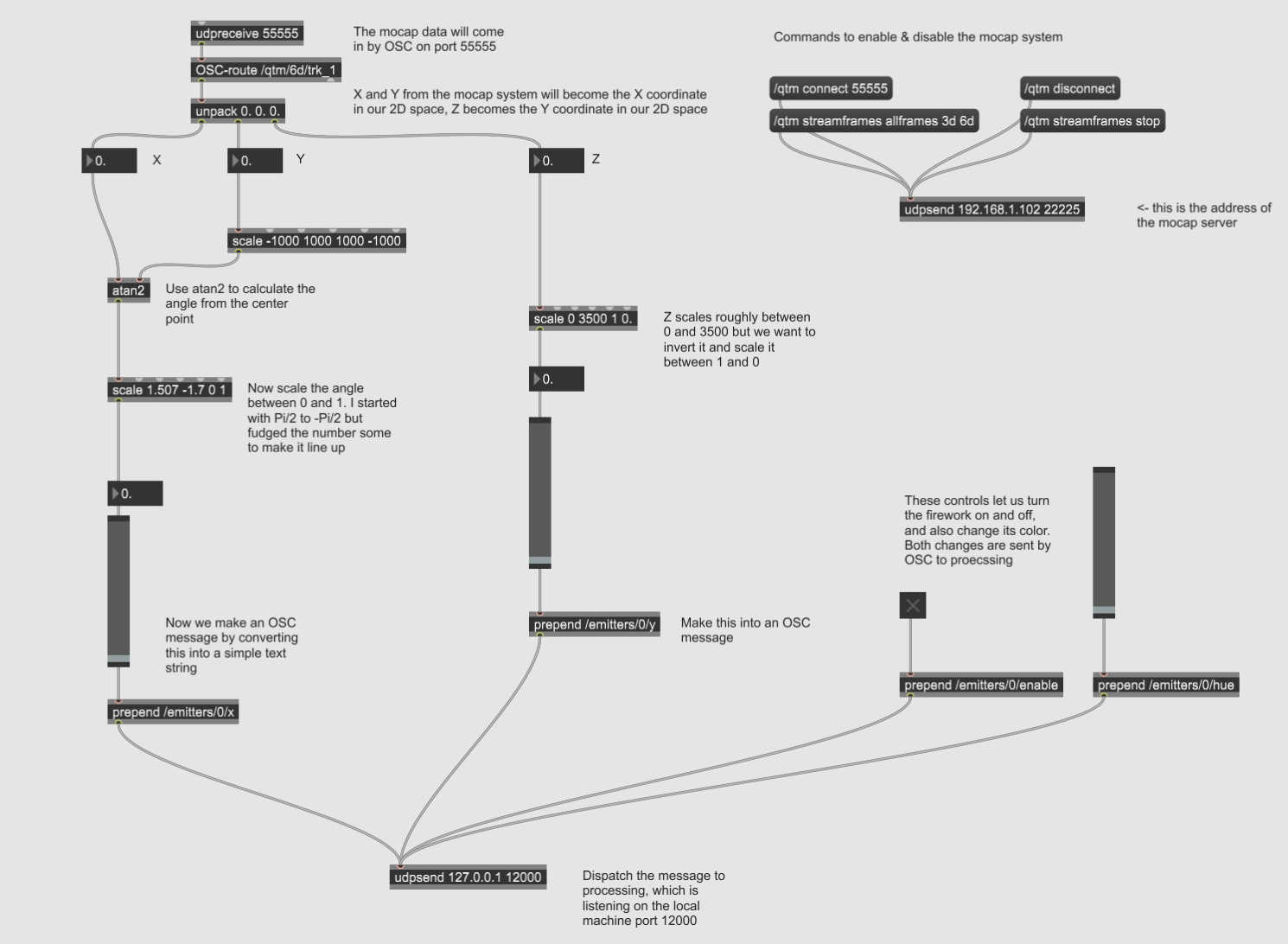

I used a Max patch to do all the glue logic between the different parts of the system. It receives the mocap data, transforms it suitably, and then sends all that data to my Processing application. I have Max listening on port 55555 for data, and Processing listening on 12000.
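If you want to poke at the Processing side without Max running, a tiny stand-alone sender is handy. This is only a sketch and not part of the project: the class `OscTestSender` and its methods are hypothetical names, it hand-encodes a single-float OSC message with the Java standard library rather than using oscP5, and it assumes the sketch is on the same machine and expects values in the 0 to 1 range. The `/emitters/0/x` address and port 12000 come from the setup described above.

```java
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical test sender, not part of the original project.
class OscTestSender {

    // OSC strings are ASCII, null-terminated, and zero-padded to a multiple of 4 bytes.
    static byte[] padString(String s) {
        byte[] raw = s.getBytes(StandardCharsets.US_ASCII);
        int padded = (raw.length / 4 + 1) * 4; // always leaves room for at least one null
        byte[] out = new byte[padded];
        System.arraycopy(raw, 0, out, 0, raw.length);
        return out;
    }

    // Encode an OSC message carrying a single float32 argument (type tag ",f").
    static byte[] encodeFloatMessage(String address, float value) {
        byte[] addr = padString(address);
        byte[] tags = padString(",f");
        // ByteBuffer is big-endian by default, which is what OSC expects.
        ByteBuffer buf = ByteBuffer.allocate(addr.length + tags.length + 4);
        buf.put(addr).put(tags).putFloat(value);
        return buf.array();
    }

    public static void main(String[] args) throws Exception {
        // Assumption: 0.5 should place emitter 0 halfway across the canvas.
        byte[] payload = encodeFloatMessage("/emitters/0/x", 0.5f);
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.send(new DatagramPacket(payload, payload.length,
                    InetAddress.getLoopbackAddress(), 12000));
        }
    }
}
```

Running `main` while the sketch is up should move the first emitter horizontally, provided that emitter has been created.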

The source file for the patch is on GitHub, but you can see how it looks here:

Note the atan2 configuration to convert the position of a mocap tracker to an angle (in radians) from the center of the space.
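For anyone who doesn't read Max patches, the same idea looks roughly like this in Java. This is a sketch of the general technique, not a transcription of the patch: the class and method names are hypothetical, and it assumes the tracker position and the center of the space are given in the same units with x and y as the floor-plane axes.

```java
// Hypothetical helper illustrating the atan2 step; the real logic lives in the Max patch.
class TrackerAngle {

    // Angle in radians, between -PI and PI, of the point (x, y) as seen from the center.
    // An angle of 0 points along the positive x axis.
    static double angleFromCenter(double x, double y, double centerX, double centerY) {
        return Math.atan2(y - centerY, x - centerX);
    }

    // Shift an angle into the range [0, 2*PI), which is often easier to map onto a display.
    static double normalize(double radians) {
        double twoPi = 2 * Math.PI;
        return ((radians % twoPi) + twoPi) % twoPi;
    }
}
```

Using `atan2` rather than a plain arctangent of `y / x` avoids dividing by zero and keeps the quadrant information, so a tracker directly behind the center doesn't get confused with one directly in front.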

I look forward to getting more time in the space to actually build something real. The 2-hour slot I was given was pretty fun for building a little demo, but I’d have been lost without the generous help of Brad Gallagher.

Some large-scale dataviz project is really appealing to me, but I’d need to find a way to drive the display at full resolution and frame rate before getting too far into it.